In my original post, Scaling up Virtual Machines in vSphere to meet performance requirements, I described a unique need for the Software Development Team to have a lot of horsepower to improve the speed of their already virtualized code compiling systems. My plan of attack was simple. Address the CPU bound systems with more powerful blades, and scale up the VMs accordingly. Budget constraints axed the storage array included in my proposal, and also kept this effort limited to keeping the same number of vSphere hosts for the task.

The four new Dell M620 blades arrived and were quickly built up with vSphere 5.0 U2 (Enterprise Plus Licensing) with the EqualLogic MEM installed. A separate cluster was created to ensure all build systems were kept separate, and so that I didn’t have to mask any CPU features to make them work with previous generation blades. Next up was to make sure each build VM was running VM hardware level 8. Prior to vSphere 5, the guest VM was unaware of the NUMA architecture behind it. Without the guest OS understanding memory locality, one could introduce problems into otherwise efficient processes. While I could find no evidence that the compilers for either OS are NUMA aware, I knew the Operating Systems understood NUMA.

Each build VM has a separate vmdk for its compiling activities. Their D:\ drive (or /home for Linux) is where the local sandboxes live. I typically have this second drive on a “Virtual Device Node” changed to something other than 0:x. This has proven beneficial in previous performance optimization efforts.

I figured the testing would be somewhat trivial, and would be wrapped up in a few days. After all, the blades were purchased to quickly deliver CPU power for a production environment, and I didn’t want to hold that up. But the data the tests returned had some interesting surprises. It is not every day that you get to test 16vCPU VMs for a production environment that can actually use the power. My home lab certainly doesn’t allow me to do this, so I wanted to make this count.

Testing

The baseline tests would be to run code compiling on two of the production build systems (one Linux, and the other Windows) on an old blade, then the same set with the same source code on the new blades. This would help in better understanding if there were speed improvements from the newer generation chips. Most of the existing build VMs are similar in their configuration. The two test VMs would start out with 4vCPUs and 4GB of RAM. Once the baselines were established, the virtual resources of each VM would be dialed up to see how they responded. Every run would compile the very same source code.

For the tests, I isolated each blade so they were not serving up other needs. The test VMs resided in an isolated datastore, but lived on a group of EqualLogic arrays that were part of the production environment. Tests were run at all times during the day and night to simulate real world scenarios, as well as demonstrate any variability in SAN performance.

Build times would be officially recorded in the Developers Build Dashboard. All resources would be observed in vSphere in real time, with screen captures made of things like CPU, disk and memory, and dumped into my favorite brain-dump application: Microsoft OneNote. I decided to do this on a whim when I began testing, but it proved incredibly valuable later on as I found myself constantly looking at dozens of screen captures.

The one thing I didn’t have time to test was the nearly limitless possible scenarios in which multiple monster VMs were contending for CPUs at the same time. But the primary interest for now was to see how the build systems scaled. I would then make my sizing judgments off of the results, and off of previous experience with smaller build VMs on smaller hosts.

The [n/n] title of each test result column indicates the number of vCPUs followed by the amount of vRAM associated. Stacked bar graphs show a lighter color at the top of each bar. This indicates the difference in time between the best result and the worst result. The biggest factor of course would be the SAN.
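As a rough illustration of how each stacked bar is put together, the sketch below splits a set of build times for one configuration into the solid segment (the best result) and the lighter segment on top (worst minus best). The function name and the sample times are made up for illustration; they are not measurements from these tests.

```python
def bar_segments(build_minutes):
    """Return (best, spread) for one [vCPU/vRAM] configuration.

    best   -> height of the solid part of the bar (fastest run)
    spread -> height of the lighter part stacked on top (worst - best)
    """
    if not build_minutes:
        raise ValueError("need at least one build time")
    best = min(build_minutes)
    worst = max(build_minutes)
    return best, worst - best


# Hypothetical placeholder times in minutes, NOT results from this post.
example_runs = [20.0, 23.5, 26.0]
best, spread = bar_segments(example_runs)
print(f"solid segment: {best} min, lighter segment: {spread} min")
```

A tall lighter segment means the same configuration produced very different build times from run to run, which in these tests mostly points at the SAN.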

Bottleneck cat and mouse

Performance testing is a great exercise for anyone, because it helps challenge your own assumptions on where the bottleneck really is. No resource lives as an island, and this project showcased that perfectly. Improving the performance of these CPU bound systems may very well shift the contention elsewhere. However, it may expose other bottlenecks that you were not aware of, as resources are just one element of bottleneck chasing. Applications and the Operating Systems they run on are not perfect, nor are the scripts that kick them off. Keep this in mind when looking at the results.

Test Results – Windows

The following test results are from Windows 7 running the Visual Studio compiler, across three generations of blades: the Dell M600 (Harpertown), M610 (Nehalem), and M620 (Sandy Bridge).

Comparing a Windows code compile across blades without any virtual resource modifications.

Yes, that is right. The old M600 blades were that terrible when it came to running VMs that were compiling. This would explain the inconsistent build time results we had seen in the past. While there was improvement in the M620 over the M610s, the real power of the M620s is that they have double the number of physical cores (16) of the previous generations. Also noteworthy is the significant impact (up to 50%) the SAN had on the end result.

Comparing a Windows code compile on new blade, but scaling up virtual resources

Several interesting observations about this image (above).

- When the SAN can’t keep up, it can easily give back the improvements made in raw compute power.

- Performance degraded when compiling with more than 8vCPUs. It was so bad that I quit running tests when it became clear they weren’t compiling efficiently (which is why you do not see SAN variability once I started getting negative returns).

- Doubling the vCPUs from 4 to 8, and the vRAM from 4 to 8 only improved the build time by about 30%, even though the compile showed nearly perfect multithreading (shown below) and 100% CPU usage. Why the degradation? Keep reading!

- On a different note, it was already becoming quite clear that I needed to take a little corrective action in my testing. The SAN was being overworked at all times of the day, and it was impacting my ability to get accurate test results in raw compute power. The more samples I ran, the more consistent the inconsistency was. Each of the M620s had a 100GB SSD, so I decided to run the D:\ drive (where the build sandbox lives) on there to see how a lack of storage contention impacted times. The purple line indicates the build times of the given configuration, but with the D:\ drive of the VM living on the local SSD drive.

The difference between a slow run on the SAN and a run with faster storage was spreading.
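To put the Windows 4-to-8 vCPU result into speedup terms, here is a small sketch that converts a reduction in build time into a speedup factor. It assumes the "about 30%" figure above means the build took about 30% less wall-clock time; the function name is illustrative only.

```python
def speedup_from_time_reduction(reduction):
    """Convert a fractional drop in build time into a speedup factor.

    reduction=0.30 means the new build takes 70% of the original time.
    """
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be in the range [0, 1)")
    return 1.0 / (1.0 - reduction)


# Assumption: "improved the build time by about 30%" = 30% less build time.
windows_speedup = speedup_from_time_reduction(0.30)

# Resources were doubled (4 -> 8 vCPUs), so perfect scaling would be 2.0x.
resource_multiple = 8 / 4
efficiency = windows_speedup / resource_multiple

print(f"speedup: {windows_speedup:.2f}x, scaling efficiency: {efficiency:.0%}")
```

Under that reading, doubling the virtual resources bought roughly a 1.4x speedup, or a little over 70% of what perfect scaling would deliver.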

Test Results – Linux

The following test results are from Linux running the GCC compiler, across three generations of blades: the Dell M600 (Harpertown), M610 (Nehalem), and M620 (Sandy Bridge).

Comparing a Linux code compile across blades without any virtual resource modifications.

The Linux compiler showed a much more linear improvement, along with being faster than its Windows counterpart. There were noticeable improvements across the newer generations of blades, with no modifications in virtual resources. However, the margin of variability from the SAN is a concern.

Comparing a Linux code compile on new blade, but scaling up virtual resources

At first glance it looks as if the Linux GCC compiler scales up well, but not in a linear way. But take a look at the next graph, where similar to the experiment with the Windows VM, I changed the location of the vmdk file used for the /home drive (where the build sandbox lives) over to the local SSD drive.

This shows very linear scalability with Linux and a GCC compiler. Moving from 4vCPUs and 4GB of RAM to 8vCPUs and 8GB of RAM made the compile 2.2x faster, for a total build time of just 12 minutes. Triple the virtual resources to 12/12, and it is an almost linear 2.9x faster than the original configuration. Bump it up to 16vCPUs, and diminishing returns begin to show up, where it is 3.4x faster than the original configuration. I suspect crossing NUMA nodes and the architecture of the code itself were impacting this a bit. Still, don’t lose sight of the fact that a build that could take up to 45 minutes on the old configuration took only 7 minutes with 16vCPUs.
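One way to see where the diminishing returns start is to divide each speedup by how much the virtual resources were multiplied. The sketch below does that using only the speedups quoted above for the local SSD runs; the names are illustrative, and "efficiency" here simply means speedup divided by the resource multiple, with 1.0 being perfectly linear.

```python
BASELINE_VCPUS = 4

# Speedups over the 4vCPU/4GB configuration, as quoted in the text above.
linux_ssd_speedups = {8: 2.2, 12: 2.9, 16: 3.4}


def scaling_efficiency(vcpus, speedup, baseline_vcpus=BASELINE_VCPUS):
    """Speedup achieved per unit of added resources (1.0 = linear)."""
    resource_multiple = vcpus / baseline_vcpus
    return speedup / resource_multiple


for vcpus, speedup in linux_ssd_speedups.items():
    eff = scaling_efficiency(vcpus, speedup)
    print(f"{vcpus:>2} vCPUs: {speedup:.1f}x faster, efficiency {eff:.2f}")
```

The 8 and 12 vCPU configurations stay at or near 1.0, while 16 vCPUs drops to about 0.85, which is consistent with the diminishing returns described above.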

The big takeaways from these results are the differences in scalability in compilers, and how overtaxed the storage is. Let’s take a look at each one of these.

The compilers

Internally it had long been known that Linux compiled the same code faster than Windows. Way faster. But for various reasons it had been difficult to pinpoint why. The data returned made it obvious. It was the compiler.

While it was clear that the real separation in multithreaded compiling occurred after 8vCPUs, the real problem with the Windows Visual Studio compiler begins after 4vCPUs. This surprised me a bit because when monitoring the vCPU usage (in stacked graph format) in vCenter, it was using every CPU cycle given to it, and multithreading quite evenly. The testing used Visual Studio 2008, but I also tested newer versions of Visual Studio, with nearly the same results.

Storage

The original proposal included storage to support the additional compute horsepower. The existing set of arrays had served our needs very well, but were really targeted at general purpose I/O needs with a focus of capacity in mind. During the budget review process, I had received many questions as to why we needed a storage array. Boiling it down to even the simplest of terms didn’t allow for that line item to survive the last round of cuts. Sure, there was a price to pay for the array, but the results show there is a price to pay for not buying the array.

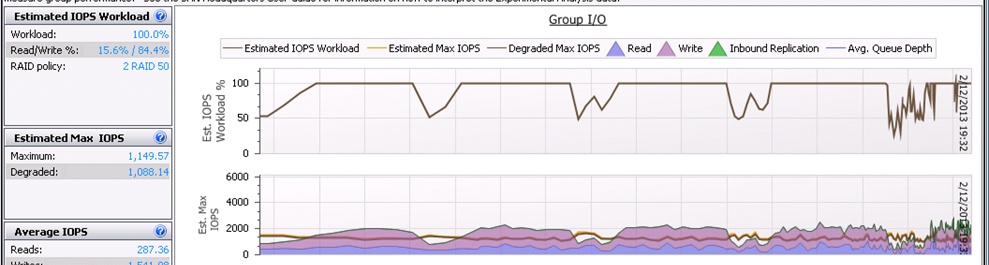

I knew storage was going to be an issue, but when contention occurs, it’s hard to determine how much of an impact it will have. Think of a busy freeway, where throughput is pretty easy to predict up to a certain threshold. Hit critical mass, and predicting commute times becomes very difficult. Same thing with storage. But how did I know storage was going to be an issue? The free tool provided to all Dell EqualLogic customers: SAN HQ. This tool has been a trusted resource for me in the past, and removes ALL speculation when it comes to historical usage of the arrays, and other valuable statistics. IOPS, read/write ratios, latency etc. You name it.

Historical data of Estimated Workload over the period of 1 month

Historical data of Estimated Workload over the period of 12 months

Both images show that with the exception of weekends, the SAN arrays are maxed out to 100% of their estimated workload. The overtaxing shows up on the lower part of each screen capture, where the reads and writes surpass the brown line indicating the estimated maximum IOPS of the array. The 12 month history showed that our storage performance needs were trending upward.

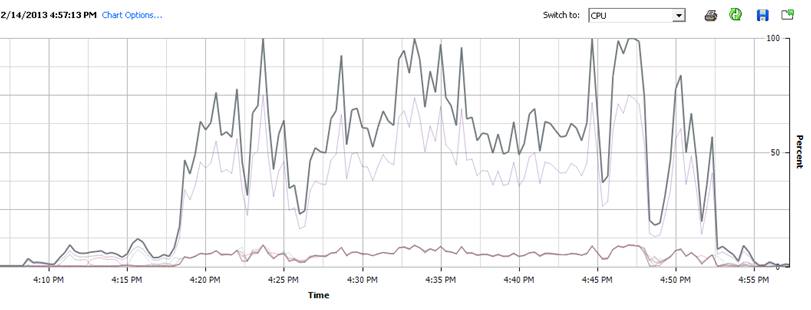

Storage contention and how it relates to used CPU cycles is also worth noting. Look at how inadequate storage I/O influences compute. The image below shows the CPU utilization for one of the Linux builds using 8vCPUs and 8GB RAM when the /home drive was using fast storage (the local SSD on the vSphere host).

Now look at the same build when running against a busy SAN array. It completely changes the CPU usage profile, and thus took 46% longer to complete.

General Observations and lessons

- If you are running any hosts using pre-Nehalem architectures, now is a good time to question why. They may not be worth wasting vSphere licensing on. The core count and architectural improvements on the newer chips put the nails in the coffin on these older chips.

- Storage Storage Storage. If you have CPU intensive operations, deal with the CPU, but don’t neglect storage. The test results above demonstrate how one can easily give back the entire amount of performance gains in CPU by not having storage performance to support it.

- Giving a Windows code compiling VM a lot of CPU, but not increasing the RAM seemed to make the compiler trip on its own toes. This makes sense, as more CPUs need more memory addresses to work with.

- The testing showcased another element of virtualization that I love. It often helps you understand problems that you might otherwise be blind to. After establishing baseline testing, I noticed some of the Linux build systems were not multithreading the way they should. Turns out it was some scripting errors by our Developers. Easily corrected.

Conclusion

The new Dell M620 blades provided an immediate performance return. All of the build VMs have been scaled up to 8vCPUs and 8GB of RAM to get the best return while providing good scalability of the cluster. Even with that modest doubling of virtual resources, we now have nearly 30 build VMs that, once storage performance is no longer an issue, will run between 4 and 4.3 times faster than the same VMs on the old M600 blades. The primary objective moving forward is to target storage that will adequately support these build VMs, as well as to look into ways to improve multithreaded code compiling in Windows.

Helpful Links

Kitware blog post on multithreaded code compiling options

http://www.kitware.com/blog/home/post/434